Artificial intelligence has transformed many work processes, and businesses have rushed to implement AI-powered solutions. However, adopting AI technology also raises various concerns about data privacy, security, misuse, and objectivity.

To address these issues and many others, the AI TRiSM framework was created. It has become a robust tool for improving AI models and a solid basis for AI policies.

What is AI TRiSM?

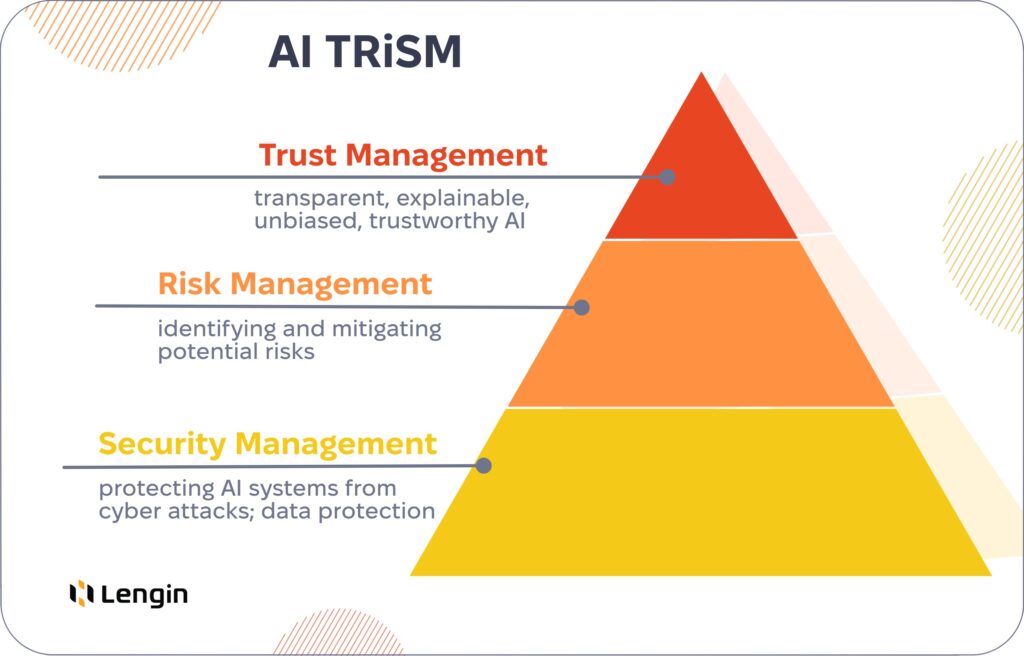

TRiSM stands for trust, risk, and security management. It’s a framework for building and keeping AI models fair, trustworthy, transparent, unbiased, and reliable. It consists of three fundamental parts represented in the name.

Trust Management

It isn’t easy to trust something you can’t see.

For most users, the inner workings of AI algorithms are a black box. You enter a question, something happens, and you get an answer. People tend to be suspicious of things they don’t understand.

That’s why questions like “How can I be sure that answer is correct?”, “Is this answer objective?”, or “How did AI arrive at such a conclusion?” come up more and more often.

For users to trust the system, it must be able to demonstrate that its answers are correct and explain how it reached them. That is why transparency and accuracy are top priorities for modern AI models.

Risk Management

What are the risks of using AI? The rise of the machines? Not quite. We are talking about data privacy, confidentiality, and security, as well as the concerns mentioned above that make it hard to trust AI.

AI risk management involves identifying those risks and developing preventive measures or mitigation strategies. Without exaggeration, this part is the most difficult: people are creative, and it is impossible to predict every way AI might be used.

Deepfakes, apps that remove clothes from people in photos, and generated voice messages from “relatives and friends” desperate for financial help are already a reality. Risk management is about preventing people from using AI for purposes that violate the law and moral norms.

Security Management

AI systems are frequent targets of cyberattacks. The most significant threat is a data breach. AI systems contain enormous amounts of data, including sensitive information, and any leak of or unauthorized access to this data can lead to the theft of intellectual property, financial loss, reputational damage, identity theft, and more.

AI security management also works both ways: it protects AI from humans as well as humans from AI. Recently, the number of AI-powered cyberattacks has increased significantly.

The most common target is the financial industry. Implementing blockchain technology helps protect data and transactions, but there is no guarantee that AI will not become advanced enough to overcome even that.

One more AI security management responsibility is ensuring that AI-powered security tools are themselves secure.

To codify all these principles, many companies that develop or implement AI solutions create AI policies.

AI Policy

An AI policy is a document that sets out principles and rules for developing, sharing, and using artificial intelligence. It is designed to mitigate a range of AI-related concerns, including the following.

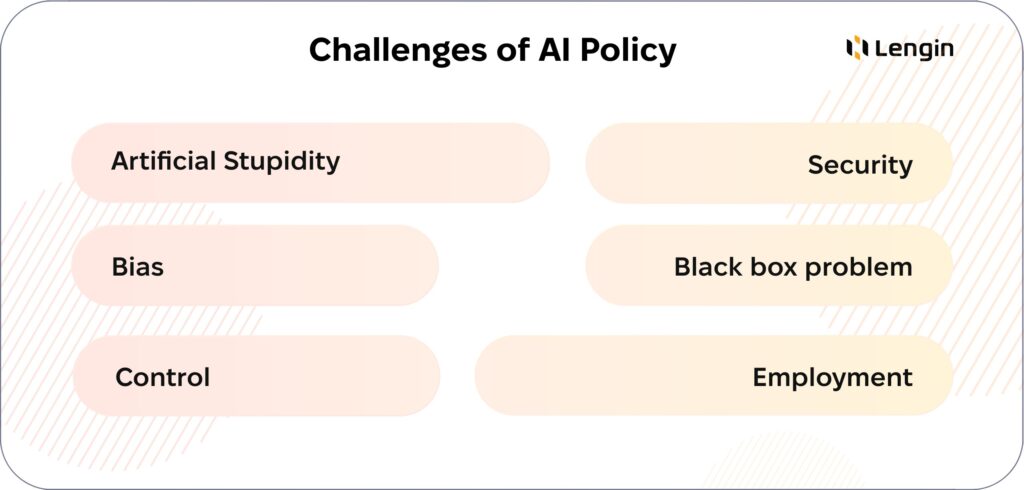

Artificial Stupidity and Employment

Everything that can be automated is being automated. What’s next?

AI has been called the biggest driver of unemployment, but is that really so? Do we really no longer need humans? The answer is that we do, and the main reason is “artificial stupidity.”

AI models make mistakes, so humans are still needed to check and validate their responses. Artificial intelligence is a great aid in decision-making, and in some cases decisions are delegated to it entirely, as with self-driving cars.

A more everyday example showing that “artificial stupidity” is a real issue is the CAPTCHA. Yes, those annoying checks on whether you are a robot are now powered by AI, too. Remember how many times you’ve failed to prove that you are human, and you will have to admit that modern AI models are imperfect.

Control Over AI

Controlling a system that learns by itself is quite a challenge. AI finds, processes, and generates data, often without human oversight. One of the main ways to guide AI development in the right direction is to filter the data it consumes.

It’s similar to parenting. Think of AI as a child. If we give it all the information in the world without teaching it how to use that information in a way that will not hurt people, we will end up with an uncontrollable weapon.

Speaking of weapons, the arms race is another global concern. AI-powered cyberattacks already happen every day. What about terrorist attacks with AI-controlled weapons?

That’s why AI security management includes user authorization and restricted access in some regions. Such measures are necessary to keep AI from falling into the wrong hands.

Bias

Since AI models are trained on real-world data, they take in an enormous amount of biased information. One of the purposes of trust management in the TRiSM framework is to ensure that responses are free from prejudice and discrimination.

As a result of effective trust management, AI models are equipped with filters based on the keywords most often used in biased contexts. Ask an AI language model for curse words, and you will most likely get a polite refusal.

The same filtering is applied when an AI system generates a response, so users get the most objective information possible. For the same reason, such systems limit personalization: they aim to tell the truth, not just what you want to hear.
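To make the keyword filtering idea more concrete, here is a minimal sketch in Python. It is only an illustration under simple assumptions: the blocklist entries, the refusal text, and the function names are all hypothetical, and real AI systems rely on far more sophisticated moderation than plain word matching.

```python
import re

# Hypothetical blocklist; a real system would maintain a much larger, curated list.
BLOCKED_TERMS = {"slur_a", "slur_b"}

# Hypothetical refusal message returned instead of a filtered answer.
REFUSAL = "Sorry, I can't help with that request."


def contains_blocked_term(text: str, blocked=BLOCKED_TERMS) -> bool:
    """Return True if any whole word in `text` is on the blocklist."""
    words = re.findall(r"[a-z0-9_']+", text.lower())
    return any(word in blocked for word in words)


def filter_response(prompt: str, draft_answer: str) -> str:
    """Refuse if either the user's prompt or the drafted answer trips the filter."""
    if contains_blocked_term(prompt) or contains_blocked_term(draft_answer):
        return REFUSAL
    return draft_answer
```

The check runs on both the incoming prompt and the drafted answer, which mirrors the idea that filtering happens on the way in and on the way out.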

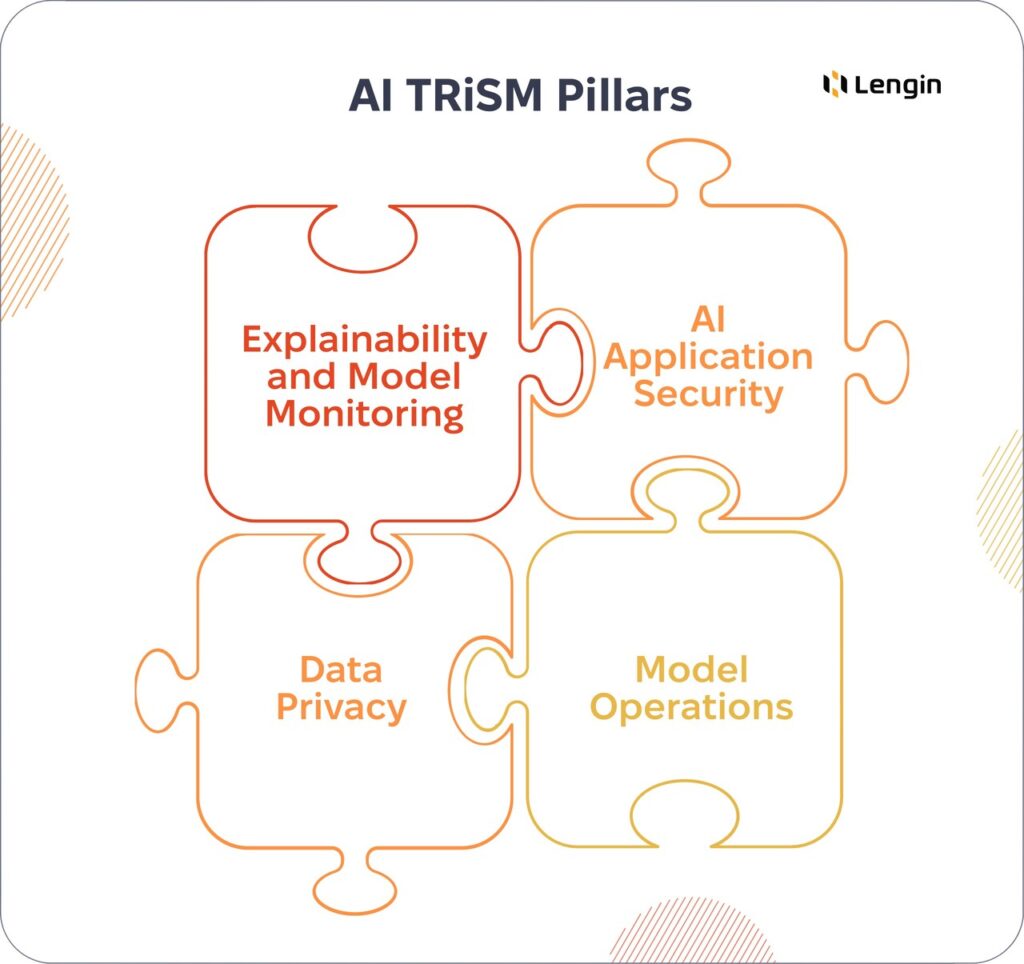

4 Pillars of AI TRiSM

Model Monitoring and Explainability

This pillar focuses on the ability to understand and interpret the decisions made by AI models, which addresses the black box problem. People can see what data an AI system uses to produce answers, why it filters some information, whether the system will use their data, and much more. All of this makes users trust the AI.

Such transparency also helps monitor issues such as disturbing content and bias. That’s why most modern AI systems let users report bugs or file complaints, so everyone can contribute to making AI better. This small feature is a powerful tool for building user trust and monitoring the system.

ModelOps

The ModelOps, or AI model operations, pillar aims to manage the entire lifecycle of AI models effectively, from development to deployment, and to ensure that the models perform optimally.

This includes monitoring model performance, updating models, and ensuring that they are used in line with organizational policies and regulations.
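As a rough illustration of what monitoring model performance can look like, the sketch below tracks accuracy over the most recent predictions and flags the model for review when it drops too low. The class name, the window size, and the threshold are assumptions made for this example, not part of any specific ModelOps tool.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions (a rolling window)."""

    def __init__(self, window_size: int = 100, alert_threshold: float = 0.9):
        # Each entry is True for a correct prediction, False otherwise.
        self.results = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, predicted, actual) -> None:
        """Store whether the latest prediction matched the known outcome."""
        self.results.append(predicted == actual)

    def accuracy(self) -> float:
        """Share of correct predictions in the current window."""
        if not self.results:
            return 1.0  # nothing recorded yet, so no evidence of a problem
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        """True when rolling accuracy falls below the alert threshold."""
        return self.accuracy() < self.alert_threshold
```

In practice, a check like `needs_review()` would feed an alert or a retraining workflow. Here it simply returns a flag.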

AI Application Security

AI Application Security focuses on implementing security measures for AI applications and the data they process. It protects AI systems and all their data from cyber threats.

AI security management includes implementing access controls, encryption, and other security measures to prevent unauthorized access to AI systems and data.
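Access control is the easiest of these measures to show in a few lines. The following is a minimal sketch of a role-based check. The roles, the actions, and the function names are hypothetical and chosen only for illustration.

```python
# Hypothetical mapping of roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "admin": {"query_model", "view_training_data", "update_model"},
    "analyst": {"query_model", "view_training_data"},
    "user": {"query_model"},
}


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions at all (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, set())


def perform(role: str, action: str) -> str:
    """Run an action only if the role is permitted; otherwise raise an error."""
    if not is_allowed(role, action):
        raise PermissionError(f"role '{role}' may not perform '{action}'")
    return f"{action} permitted for {role}"
```

The deny-by-default choice matters: a role that is missing from the mapping cannot do anything, so a configuration mistake fails safe.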

Data Privacy

AI security management protects the personal data that users enter during registration and authorization, as well as the data used to train the AI model. It relies on various approaches, for example, encryption, data minimization, data masking, and data perturbation.
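Three of these techniques are simple enough to sketch with the Python standard library. The helper names and the example field names below are assumptions made for illustration. Encryption is left out because it is best handled by a vetted cryptography library rather than hand-written code.

```python
import random


def mask_email(email: str) -> str:
    """Data masking: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"  # not a recognizable email, so hide everything
    return f"{local[0]}{'*' * (len(local) - 1)}@{domain}"


def minimize(record: dict, allowed_fields: set) -> dict:
    """Data minimization: keep only the fields that are actually needed."""
    return {key: value for key, value in record.items() if key in allowed_fields}


def perturb(value: float, scale: float, rng: random.Random) -> float:
    """Data perturbation: add zero-mean Gaussian noise to a numeric value."""
    return value + rng.gauss(0.0, scale)


# Example usage with a hypothetical user record.
record = {"email": "alice@example.com", "age": 34, "ssn": "000-00-0000"}
safe = minimize(record, {"email", "age"})
safe["email"] = mask_email(safe["email"])
safe["age"] = perturb(safe["age"], scale=2.0, rng=random.Random(42))
```

After these steps, the record no longer contains the `ssn` field, the email is partly hidden, and the age is slightly noisy, so individual values are harder to trace back to a person.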

Why do CTOs need to consider implementing AI TRiSM?

The principles behind AI TRiSM echo those in the Blueprint for an AI Bill of Rights published by the White House, but why should CTOs consider implementing it as a practical business decision?

Cost Savings

AI systems can fail, leading to significant expenses for repairing or replacing the system, as well as potential reputational damage. Identifying and mitigating risks early, as AI TRiSM requires, makes such failures less likely and less costly.

Eliminate Security Risks

Cybersecurity is named one of the top priorities by 75% of companies surveyed by the World Economic Forum. Fortunately, AI security management lies at the foundation of the TRiSM framework.

The Trust Of Users

The more users trust your AI model, the more clients you have and the more revenue you make. Simple logic that works.

Transparent, understandable decisions make users and stakeholders trust your AI model and the way you use it in company matters.

Maximizing Value From Data

AI TRiSM provides a basis for ensuring data quality and effective data processing. When you supply objective and secure data, the AI model can generate more accurate and insightful responses.

Impact on Reputation

Remember what happened when questions about ChatGPT’s data privacy emerged? User trust in OpenAI took a hit, which set off a domino effect. Along with the reputational and, consequently, financial damage, the AI model faced regulatory action and restrictions in several European countries.

Trustworthy AI, on the other hand, is difficult to create, but it definitely boosts the company’s reputation.

Where to Start to Implement AI TRiSM?

If you are considering implementing AI TRiSM, start by studying the following established resources:

- Gartner’s Trust, Risk and Security Management (TRiSM) framework provides a set of guidelines that will help you understand TRiSM better.

- The National Institute of Standards and Technology (NIST) AI Risk Management Framework focuses primarily on risk and security management.

- The Microsoft Responsible AI Framework and Google AI Principles cover the principles for developing and deploying AI systems.

- The World Economic Forum (WEF) Principles of Responsible AI are a list of recommendations that can serve as a solid foundation for developing your AI policy.

Key Takeaways:

- AI TRiSM consists of three components (trust, risk, and security management) and stands on four pillars (explainability or model monitoring, AI model operations, AI application security, and privacy).

- AI TRiSM was created to address problems such as bias, lack of control over AI, artificial stupidity, and many others.

- Key benefits of implementing TRiSM are cost savings, elimination of security risks, obtaining users’ trust, maximizing data value, and positive impact on reputation.